Learn how to build a web scraper in Python that crawls an entire website and extracts the data of interest from each page.

Web scraping is about extracting data from the Web. Specifically, a web scraper is a tool that performs web scraping and is generally represented by a script. Python is one of the easiest and most reliable scripting languages available. Also, it comes with a wide variety of web scraping libraries. This makes Python the perfect programming language for web scraping. In detail, Python web scraping takes only a few lines of code.

In this tutorial, you will learn everything you need to know to build a simple Python scraper. This application will go through an entire website, extracting data from each page. Then, it will save all the data we scraped with Python in a CSV file. This tutorial will help you understand which are the best Python data scraping libraries, which ones to adopt, and how to use them. Follow this step-by-step tutorial and learn how to build a web-scraping Python script.

Table of contents:

Prerequisites

Best Python Web Scraping Libraries

Building a Web Scraper in Python

Conclusion

FAQs

Prerequisites

To build a Python web scraper, you need the following prerequisites:

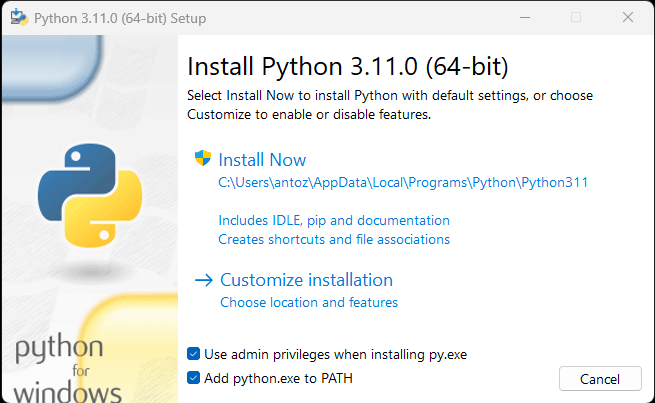

If you do not have Python installed on your computer, you can follow the first link above to download it. If you are a Windows user, make sure to mark the “Add python.exe to PATH” checkbox while installing Python, as below:

The Python for Windows installation window

This way, Windows will automatically recognize the python and pip commands in the terminal. In detail, pip is a package manager for Python packages. Note that pip is included by default in Python version 3.4 or later. So, you do not have to install it manually.

You are now ready to build your first Python web scraper. But first, you need a Python web scraping library!

Best Python Web Scraping Libraries

You can build a web scraping script from scratch with vanilla Python, but this is not the ideal solution. After all, Python is well known for its wide selection of libraries. In detail, there are several web scraping libraries to choose from. Let’s now have a look at the most important ones!

Requests

The requests library allows you to perform HTTP requests in Python. In detail, requests makes sending HTTP requests easy, especially compared to the standard Python HTTP libraries. requests plays a key role in a Python web scraping project. This is because to scrape the data contained in a web page, you first have to retrieve it via an HTTP GET request. Also, you may have to perform other HTTP requests to the server of the target website.

You can install requests with the following pip command:

pip install requests

Beautiful Soup

The Beautiful Soup Python library makes scraping information from web pages easier. In particular, Beautiful Soup works with any HTML or XML parser and provides everything you need to iterate, search, and modify the parse tree. Note that you can use Beautiful Soup with html.parser, the parser that comes with the Python Standard Library and allows you to parse HTML text files. In detail, you can use Beautiful Soup to traverse the DOM and extract the data you need from it.

You can install Beautiful Soup with pip as follows:

pip install beautifulsoup4
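To make the idea of searching the parse tree more concrete, here is a minimal sketch that parses a small HTML string with html.parser. The HTML snippet and the variable names are made up for illustration and are not part of the tutorial's target website.

```python
from bs4 import BeautifulSoup

# a tiny, made-up HTML document used only for illustration
html = """
<html>
  <body>
    <h1 id="title">Hello, scraper!</h1>
    <ul>
      <li class="item">First</li>
      <li class="item">Second</li>
    </ul>
  </body>
</html>
"""

# parsing the HTML string with the parser from the Standard Library
soup = BeautifulSoup(html, 'html.parser')

# searching the parse tree for a single element
title = soup.find('h1', id='title').text

# searching the parse tree for all matching elements
items = [li.text for li in soup.find_all('li', class_='item')]

print(title)
print(items)
```

The same find() and find_all() calls are the ones used later in this tutorial on a real page.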

Selenium

Selenium is an open-source, advanced, automated testing framework that allows you to execute operations on a web page in a browser. In other words, you can use Selenium to instruct a browser to perform certain tasks. Note that you can also use Selenium as a web scraping library because of its headless browser capabilities. If you are not familiar with this concept, a headless browser is a web browser that runs without a GUI (Graphical User Interface). If configured in headless mode, Selenium will run the browser behind the scenes.

Thus, the web pages visited in Selenium are rendered in a real browser, which is able to run JavaScript. As a result, Selenium allows you to scrape websites that depend on JavaScript. Keep in mind that you cannot achieve this with requests or any other HTTP client. This is because you need a browser to run JavaScript, whereas requests simply enables you to perform HTTP requests.

Selenium equips you with everything you need to build a web scraper, without the need for other libraries. You can install it with the following pip command:

pip install selenium

Building a Web Scraper in Python

Let’s now learn how to build a web scraper in Python. The goal of this tutorial is to learn how to extract all quote data contained in the Quotes to Scrape website. For each quote, you will learn how to scrape the text, author, and list of tags.

But first, let’s take a look at the target website. This is what a web page of Quotes to Scrape looks like:

A general view of Quotes to Scrape

As you can see, Quotes to Scrape is nothing more than a sandbox for web scraping. Specifically, it contains a paginated list of quotes. The Python web scraper you are going to build will retrieve all the citations contained on each page and return them as CSV data.

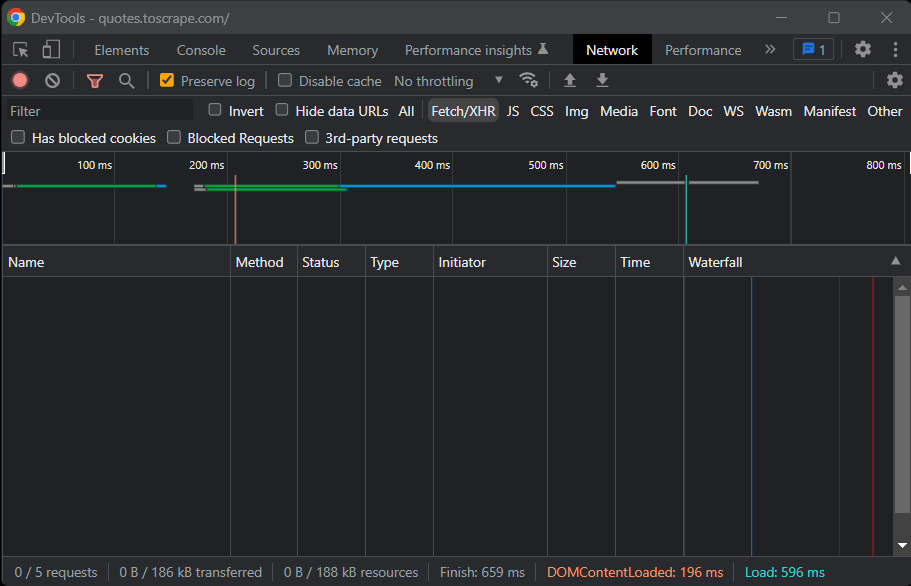

Now, it is time to understand which Python web scraping libraries are best suited to accomplish the goal. As you can see in the Network tab of the Chrome DevTools window below, the target website does not perform any Fetch/XHR request.

Note that the Fetch/XHR section is empty

In other terms, Quotes to Scrape does not rely on JavaScript to retrieve data rendered in web pages. This is a common situation for most server-rendered websites. Since the target website does not rely on JavaScript to render the page or retrieve data, you do not need Selenium to scrape it. You can still use it, but it is not required.

As you learned before, Selenium opens web pages in a browser. Since this takes time and resources, Selenium introduces a performance overhead. You can avoid this by using Beautiful Soup together with Requests. Let’s now learn how to build a simple Python web scraping script to retrieve data from a website with Beautiful Soup.

Getting started

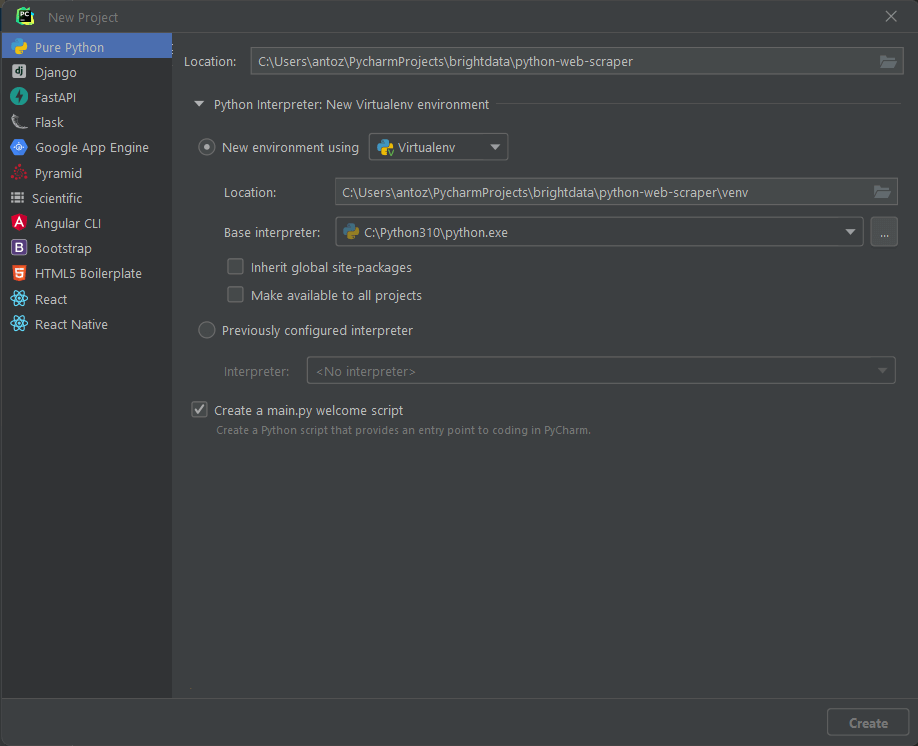

Before starting to write the first lines of code, you need to set up your Python web scraping project. Technically, you only need a single .py file. However, using an advanced IDE (Integrated Development Environment) will make your coding experience easier. Here, you are going to learn how to set up a Python project in PyCharm 2022.2.3, but any other IDE will do.

First, open PyCharm and select “File > New Project…”. In the “New Project” popup window, select “Pure Python” and initialize your project.

The “New Project” PyCharm popup window

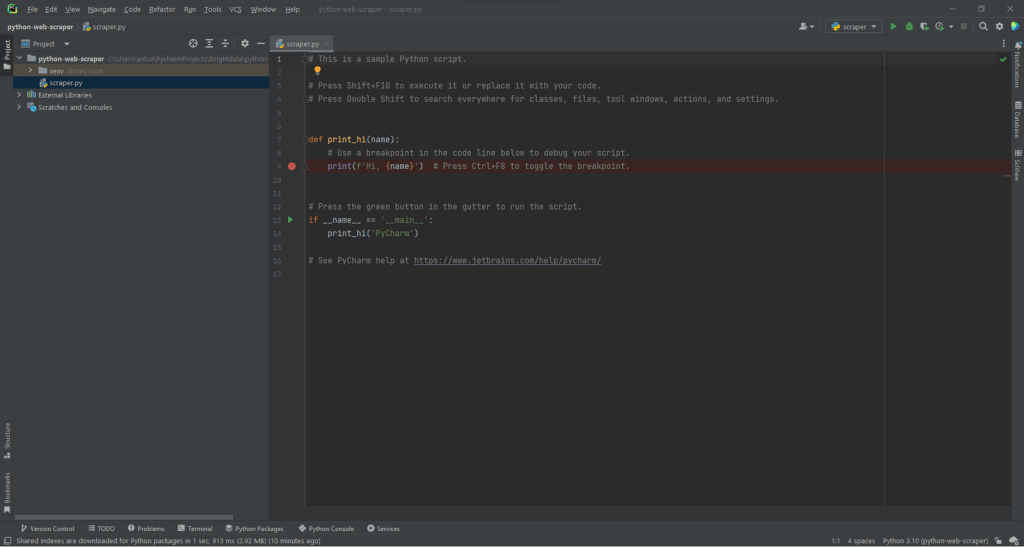

For example, you can call your project python-web-scraper. Click “Create” and you will now have access to your blank Python project. By default, PyCharm will initialize a main.py file. For the sake of clarity, you can rename it to scraper.py. This is what your project will now look like:

The python-web-scraper Python blank project in PyCharm

As you can see, PyCharm automatically initializes a Python file for you. Ignore the content of this file and delete each line of code. This way, you will start from scratch.

Now, it is time to install the project’s dependencies. You can install Requests and Beautiful Soup by launching the following command in the terminal:

pip install requests beautifulsoup4

This command will install both libraries at once. Wait for the installation process to complete. You are now ready to use Beautiful Soup and Requests to build your web crawler and scraper in Python. Make sure to import the two libraries by adding the following lines to the top of your scraper.py script file:

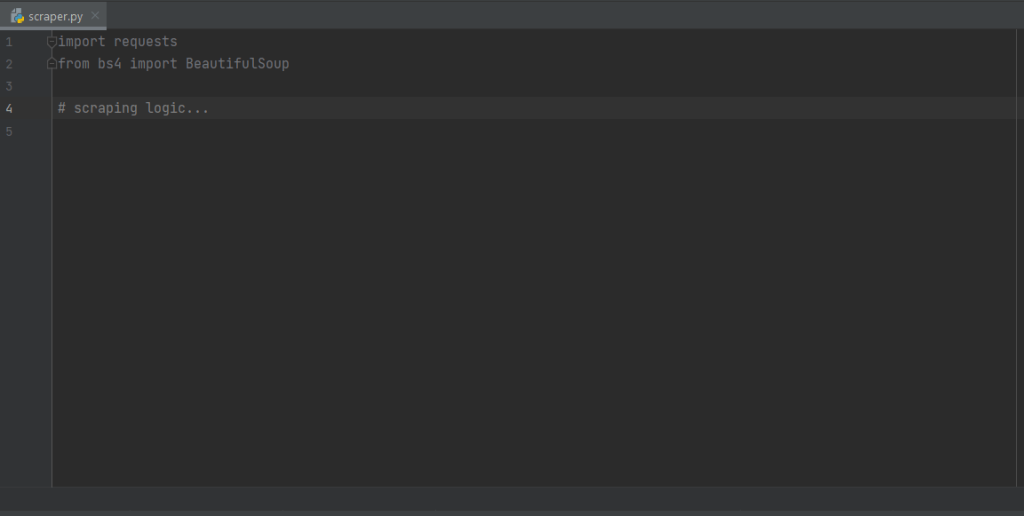

import requests

from bs4 import BeautifulSoup

PyCharm will show these two lines in gray because the libraries are not used in the code. If it underlines them in red, it means that something went wrong during the installation process. In this case, try to install them again.

The current scraper.py file

This is what your scraper.py file should now look like. You are now ready to start defining the web scraping logic.

Connecting to the target URL to scrape

The first thing to do in a web scraper is to connect to your target website. First, retrieve the complete URL of the page from your web browser. Make sure to also copy the http:// or https:// protocol section. In this case, this is the entire URL of the target website:

https://quotes.toscrape.com

Now, you can use requests to download a web page with the following line of code:

page = requests.get('https://quotes.toscrape.com')

This line simply assigns the result of the requests.get() method to the variable page. Behind the scenes, requests.get() performs a GET request using the URL passed as a parameter. Then, it returns a Response object containing the server response to the HTTP request.

If the HTTP request is executed successfully, page.status_code will contain 200. This is because the HTTP 200 OK status response code indicates that the HTTP request was executed successfully. A 4xx or 5xx HTTP status code represents an error. This may happen for several reasons, but keep in mind that many websites block requests that do not contain a valid User-Agent header. Specifically, the User-Agent request header is a string that identifies the application, operating system, and version a request comes from. Learn more about User-Agents for web scraping.

You can set a valid User-Agent header in requests as follows:

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36'
}

page = requests.get('https://quotes.toscrape.com', headers=headers)

requests will now execute the HTTP request with the headers passed as a parameter.
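Since a 4xx or 5xx status code signals an error, it can be useful to fail early instead of parsing an error page. The following is a minimal sketch of that idea, not part of the original script: fetch_html is a hypothetical helper name, and the 10-second timeout is an arbitrary assumption.

```python
import requests

# hypothetical helper, not part of the original tutorial script
def fetch_html(url, headers=None, timeout=10):
    """Download a page and return its HTML, raising on HTTP errors."""
    response = requests.get(url, headers=headers, timeout=timeout)
    # raises requests.exceptions.HTTPError on 4xx and 5xx status codes
    response.raise_for_status()
    return response.text
```

You could then call fetch_html('https://quotes.toscrape.com', headers=headers) inside a try block and catch requests.exceptions.RequestException to handle connection problems, timeouts, and HTTP errors in one place.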

What you should pay attention to is the page.text property. This will contain the HTML document returned by the server in string format. Feed the text property to Beautiful Soup to extract data from the web page. Let’s learn how.

Extracting data with the Python web scraper

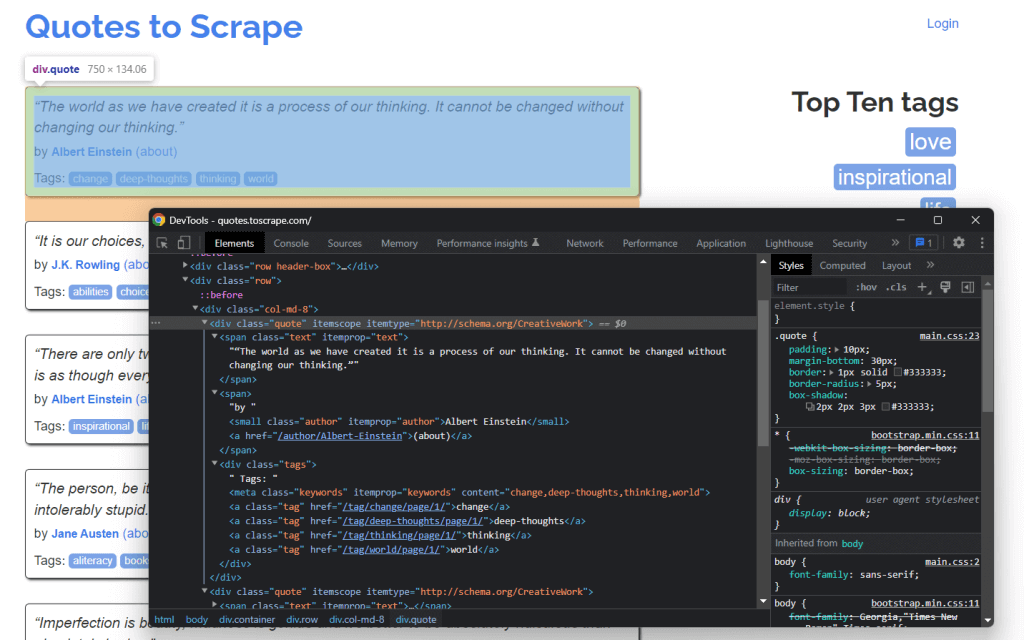

To extract data from a web page, you first have to identify the HTML elements that contain the data you are interested in. Specifically, you have to find the CSS selectors required to extract these elements from the DOM. You can achieve this by using the development tools offered by your browser. In Chrome, right-click on the HTML element of interest and select Inspect.

Inspecting the quote HTML element in Chrome DevTools

As you can see here, the quote <div> HTML element is identified by the quote class. It contains:

The quote text in a <span> HTML element

The author of the quote in a <small> HTML element

A list of tags in a <div> element, each contained in an <a> HTML element

In detail, you can extract this data using the following CSS selectors on .quote:

.text

.author

.tags .tag
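As a side note, Beautiful Soup can apply CSS selectors like these directly through its select() and select_one() methods. The sketch below uses simplified, made-up markup that only mimics the structure of a quote element; the quote text, author, and tags are invented. The rest of this tutorial uses find() and find_all() instead, which lead to the same elements.

```python
from bs4 import BeautifulSoup

# simplified, made-up markup that mimics the structure of a quote element
html = """
<div class="quote">
  <span class="text">“An example quote.”</span>
  <span>by <small class="author">Jane Doe</small></span>
  <div class="tags">
    <a class="tag" href="/tag/example/">example</a>
    <a class="tag" href="/tag/sample/">sample</a>
  </div>
</div>
"""

soup = BeautifulSoup(html, 'html.parser')

# applying the .quote selector to get the quote element
quote_element = soup.select_one('.quote')

# applying the remaining selectors relative to the quote element
text = quote_element.select_one('.text').text
author = quote_element.select_one('.author').text
tags = [a.text for a in quote_element.select('.tags .tag')]

print(text, author, tags)
```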

Let’s now learn how to achieve this with Beautiful Soup in Python. First, let’s pass the page.text HTML document to the BeautifulSoup() constructor:

soup = BeautifulSoup(page.text, 'html.parser')

The second parameter specifies the parser that Beautiful Soup will use to parse the HTML document. The soup variable now contains a BeautifulSoup object. This is a parse tree generated from parsing the HTML document contained in page.text with the Python built-in html.parser.

Now, initialize a variable that will contain the list of all scraped data.

quotes = []

It is now time to use soup to extract elements from the DOM as follows:

quote_elements = soup.find_all('div', class_='quote')

The find_all() method will return the list of all <div> HTML elements identified by the quote class. In other terms, this line of code is equivalent to applying the .quote CSS selector to retrieve the list of quote HTML elements on the page. You can then iterate over the quote_elements list to retrieve the quote data as below:

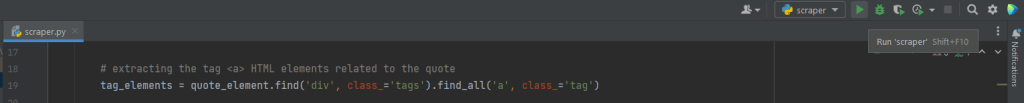

for quote_element in quote_elements:
    # extracting the text of the quote
    text = quote_element.find('span', class_='text').text

    # extracting the author of the quote
    author = quote_element.find('small', class_='author').text

    # extracting the tag <a> HTML elements related to the quote
    tag_elements = quote_element.find('div', class_='tags').find_all('a', class_='tag')

    # storing the list of tag strings in a list
    tags = []
    for tag_element in tag_elements:
        tags.append(tag_element.text)

Thanks to the Beautiful Soup find() method you can extract the single HTML element of interest. Since the tags associated with the quote are more than one, you should store them in a list.
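One detail worth knowing is that find() returns None when no element matches, so chaining .text on a missing element raises an AttributeError. On Quotes to Scrape every quote appears to have all fields, but on less regular pages a small guard can help. The helper below is a hypothetical sketch (the name text_or_default and the sample markup are assumptions made for illustration).

```python
from bs4 import BeautifulSoup

# hypothetical helper, not part of the original script
def text_or_default(parent, name, class_name, default=''):
    """Return the stripped text of the first matching child, or a default."""
    element = parent.find(name, class_=class_name)
    return element.get_text(strip=True) if element is not None else default

# made-up quote markup with no author element, for illustration only
html = '<div class="quote"><span class="text"> Some text </span></div>'
quote_element = BeautifulSoup(html, 'html.parser').find('div', class_='quote')

text = text_or_default(quote_element, 'span', 'text')
author = text_or_default(quote_element, 'small', 'author', default='Unknown')

print(text, author)
```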

Then, still inside the for loop, you can transform this data into a dictionary and append it to the quotes list as follows:

    # still inside the for loop over quote_elements
    quotes.append(
        {
            'text': text,
            'author': author,
            'tags': ', '.join(tags) # merging the tags into a "A, B, ..., Z" string
        }
    )

Storing the extracted data in such a dictionary format will make your data easier to access and understand.

You just learned how to extract all quote data from a single page. But keep in mind that the target website consists of several web pages. Let’s now learn how to crawl the entire website.

Implementing the crawling logic

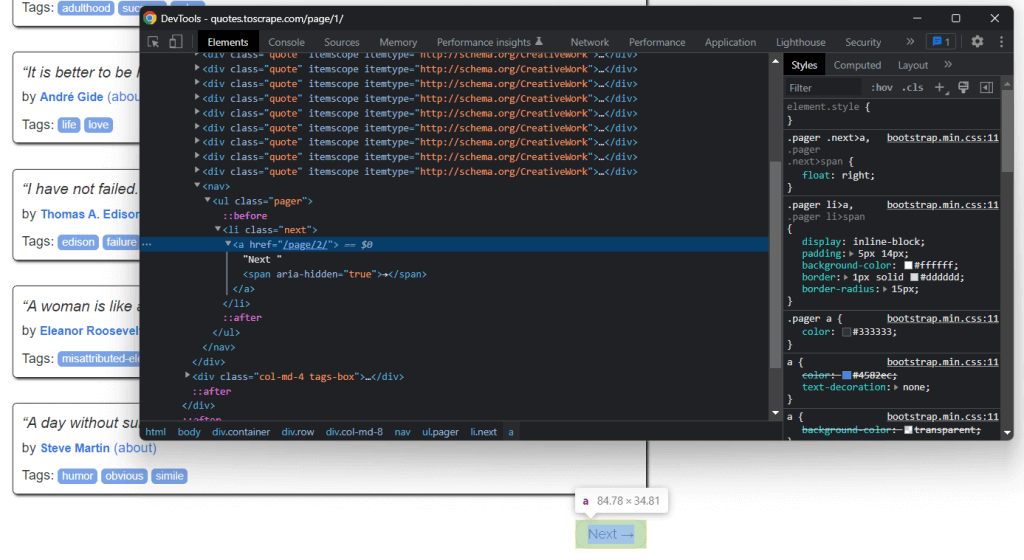

At the bottom of the home page, you can find a “Next →” <a> HTML element that links to the next page of the target website. This HTML element is contained on all but the last page. Such a scenario is common in paginated websites.

The “Next →” element

By following the link contained in the “Next →” <a> HTML element, you can easily navigate the entire website. So, let’s start from the home page and see how to go through each page that the target website consists of. All you have to do is look for the .next <li> HTML element and extract the relative link to the next page.

You can implement the crawling logic as follows:

# the url of the home page of the target website
base_url = 'https://quotes.toscrape.com'

# retrieving the page and initializing soup...

# getting the "Next →" HTML element
next_li_element = soup.find('li', class_='next')

# if there is a next page to scrape
while next_li_element is not None:
    next_page_relative_url = next_li_element.find('a', href=True)['href']

    # getting the new page
    page = requests.get(base_url + next_page_relative_url, headers=headers)

    # parsing the new page
    soup = BeautifulSoup(page.text, 'html.parser')

    # scraping logic...

    # looking for the "Next →" HTML element in the new page
    next_li_element = soup.find('li', class_='next')

This while loop iterates over each page until there is no next page. Specifically, it extracts the relative URL of the next page and uses it to create the URL of the next page to scrape. Then, it downloads the next page. Next, it scrapes it and repeats the logic.
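Plain string concatenation works here because the base URL has no trailing slash and the relative link starts with one. As a slightly more defensive alternative, the sketch below builds the next URL with urljoin() from the Standard Library. The '/page/2/' value is an example of the kind of relative link the “Next →” element contains.

```python
from urllib.parse import urljoin

base_url = 'https://quotes.toscrape.com'

# a relative link such as the one found in the "Next →" element
next_page_url = urljoin(base_url, '/page/2/')

# urljoin() also copes with a base URL that already contains a path
same_url = urljoin('https://quotes.toscrape.com/page/1/', '/page/2/')

print(next_page_url)
print(same_url)
```

With concatenation, a base URL ending in a slash would produce a double slash in the result, which urljoin() avoids.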

You just learned how to implement crawling logic to scrape an entire website. It is now time to see how to convert the extracted data into a more useful format.

Converting the data into CSV format

Let’s see how to convert the list of dictionaries containing the scraped quote data into a CSV file. Achieve this with the following lines:

import csv

# scraping logic...

# opening the "quotes.csv" file for writing,
# creating it if not present
csv_file = open('quotes.csv', 'w', encoding='utf-8', newline='')

# initializing the writer object to insert data
# in the CSV file
writer = csv.writer(csv_file)

# writing the header of the CSV file
writer.writerow(['Text', 'Author', 'Tags'])

# writing each row of the CSV
for quote in quotes:
    writer.writerow(quote.values())

# terminating the operation and releasing the resources
csv_file.close()

What this snippet does is write the quote data contained in the list of dictionaries to a quotes.csv file. Note that csv is part of the Python Standard Library. So, you can import and use it without installing an additional dependency. In detail, you simply have to create a file with open(). Then, you can populate it with the writerow() method of the writer object returned by csv.writer(). This will write each quote dictionary as a CSV-formatted row in the CSV file.
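An alternative worth considering is csv.DictWriter, which maps dictionary keys to columns explicitly instead of relying on the order of quote.values(). The sketch below is one possible variant, not the tutorial's own code: write_quotes is a hypothetical helper, the sample rows are made up, and an in-memory buffer stands in for the real file.

```python
import csv
import io

# sample data in the same shape as the quotes list (values are made up)
quotes = [
    {'text': 'First quote', 'author': 'Author A', 'tags': 'x, y'},
    {'text': 'Second quote', 'author': 'Author B', 'tags': 'z'},
]

# hypothetical helper, not part of the original script
def write_quotes(file_obj, rows):
    """Write the quote dictionaries to an open text file as CSV."""
    writer = csv.DictWriter(file_obj, fieldnames=['text', 'author', 'tags'])
    writer.writeheader()
    writer.writerows(rows)

# an in-memory buffer stands in for a real file here
buffer = io.StringIO()
write_quotes(buffer, quotes)
csv_text = buffer.getvalue()

print(csv_text)
```

With a real file, you could open it in a with statement, for example with open('quotes.csv', 'w', encoding='utf-8', newline='') as csv_file, and pass csv_file to write_quotes() so the file is closed automatically. Note that this variant writes the lowercase dictionary keys as the header row.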

You went from raw data contained in a website to structured data stored in a CSV file. The data extraction process is over and you can now take a look at the entire Python web scraper.

Putting it all together

This is what the complete Python web scraping script looks like:

import requests
from bs4 import BeautifulSoup
import csv

def scrape_page(soup, quotes):
    # retrieving all the quote <div> HTML elements on the page
    quote_elements = soup.find_all('div', class_='quote')

    # iterating over the list of quote elements
    # to extract the data of interest and store it
    # in quotes
    for quote_element in quote_elements:
        # extracting the text of the quote
        text = quote_element.find('span', class_='text').text

        # extracting the author of the quote
        author = quote_element.find('small', class_='author').text

        # extracting the tag <a> HTML elements related to the quote
        tag_elements = quote_element.find('div', class_='tags').find_all('a', class_='tag')

        # storing the list of tag strings in a list
        tags = []
        for tag_element in tag_elements:
            tags.append(tag_element.text)

        # appending a dictionary containing the quote data
        # in a new format in the quote list
        quotes.append(
            {
                'text': text,
                'author': author,
                'tags': ', '.join(tags) # merging the tags into a "A, B, ..., Z" string
            }
        )

# the url of the home page of the target website
base_url = 'https://quotes.toscrape.com'

# defining the User-Agent header to use in the GET request below
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36'
}

# retrieving the target web page
page = requests.get(base_url, headers=headers)

# parsing the target web page with Beautiful Soup
soup = BeautifulSoup(page.text, 'html.parser')

# initializing the variable that will contain
# the list of all quote data
quotes = []

# scraping the home page
scrape_page(soup, quotes)

# getting the "Next →" HTML element
next_li_element = soup.find('li', class_='next')

# if there is a next page to scrape
while next_li_element is not None:
    next_page_relative_url = next_li_element.find('a', href=True)['href']

    # getting the new page
    page = requests.get(base_url + next_page_relative_url, headers=headers)

    # parsing the new page
    soup = BeautifulSoup(page.text, 'html.parser')

    # scraping the new page
    scrape_page(soup, quotes)

    # looking for the "Next →" HTML element in the new page
    next_li_element = soup.find('li', class_='next')

# opening the "quotes.csv" file for writing,
# creating it if not present
csv_file = open('quotes.csv', 'w', encoding='utf-8', newline='')

# initializing the writer object to insert data
# in the CSV file
writer = csv.writer(csv_file)

# writing the header of the CSV file
writer.writerow(['Text', 'Author', 'Tags'])

# writing each row of the CSV
for quote in quotes:
    writer.writerow(quote.values())

# terminating the operation and releasing the resources
csv_file.close()

As you learned here, you can build a web scraper in fewer than 100 lines of code. This Python script is able to crawl an entire website, automatically extract all its data, and convert it into a CSV file.

Congrats! You just learned how to build a Python web scraper with the Requests and Beautiful Soup libraries!

Running the web scraping Python script

If you are a PyCharm user, run the script by clicking the button below:

The PyCharm “Run” button

Otherwise, launch the following Python command in the terminal inside the project’s directory:

python scraper.py

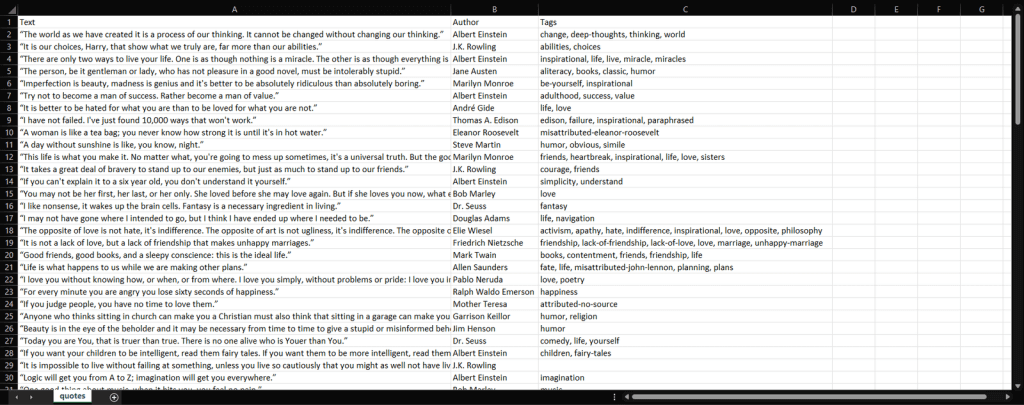

Wait for the process to end, and you will now have access to a quotes.csv file. Open it, and it should contain the following data:

The quotes.csv file

Et voilà! You now have all 100 quotes contained in the target website in one CSV file!
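If you prefer to check the result programmatically rather than by opening the file, a short sketch like the following reads the CSV back into memory. The load_quotes name is a hypothetical helper introduced here for illustration.

```python
import csv

# hypothetical helper for a quick sanity check of the output file
def load_quotes(path):
    """Read the CSV file back into a list of dictionaries."""
    with open(path, encoding='utf-8', newline='') as csv_file:
        return list(csv.DictReader(csv_file))
```

After running the scraper, calling len(load_quotes('quotes.csv')) should match the number of quotes scraped, and each row is a dictionary keyed by the Text, Author, and Tags header columns.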

Conclusion

In this tutorial, you learned what web scraping is, what you need to get started with Python, and which are the best Python web scraping libraries. Then, you saw how to use Beautiful Soup and Requests to build a web scraping application through a real-world example. As you learned, web scraping in Python takes only a few lines of code.

However, web scraping comes with several challenges. In detail, anti-bot and anti-scraping technologies have become increasingly popular. This is why you need an advanced and complete automated web scraping tool, provided by Bright Data.

To avoid getting blocked, we also recommend choosing a proxy based on your use case from the different proxy services Bright Data provides.

FAQs

Is web scraping and crawling a part of data science?

Yes, web scraping and crawling are part of the greater field of data science. Scraping and crawling serve as the foundation for all other by-products that can be derived from structured and unstructured data. This includes analytics, algorithmic models/output, insights, and ‘applicable knowledge’.

How do you scrape specific data from a website in Python?

Scraping data from a website using Python entails inspecting the page of your target URL, identifying the data you would like to extract, writing and running the data extraction code, and finally storing the data in your desired format.

How do you build a web scraper with Python?

The first step to building a Python data scraper is utilizing string methods in order to parse website data, then parsing website data using an HTML parser, and finally interacting with necessary forms and website components.