Learn to perform web scraping with Jsoup in Java to automatically extract all data from an entire website.

In this guide, we will cover the following:

What is Jsoup?

Prerequisites

How To Build a Web Scraper Using Jsoup

Conclusion

What Is Jsoup?

Jsoup is a Java HTML parser. In other words, Jsoup is a Java library that allows you to parse any HTML document. With Jsoup, you can parse a local HTML file or download a remote HTML document from a URL.

Jsoup also offers a broad range of methods to deal with the DOM. In detail, you can use CSS selectors and Jquery-like methods to select HTML elements and extract data from them. This makes Jsoup an effective web-scraping Java library for beginners and professionals.

Note that Jsoup is not the only library to perform web scraping in Java. HtmlUnit is another web-scraping popular Java library. Take a look at our HtmlUnit guide on web scraping in Java.

Prerequisites

Before you write the first line of code, you have to meet the prerequisites below:

Java >= 8: any version of Java greater than or equal to 8 will do. Downloading and installing an LTS (Long Term Support) version of Java is recommended. Specifically, this tutorial is based on Java 17. At the time of writing, Java 17 is the latest LTS version of Java.

Maven or Gradle: you can choose whichever Java building automation tool you prefer. Specifically, you will need Maven or Gradle for their dependency management functionality.

An advanced IDE supporting Java: any IDE that supports Java with Maven or Gradle is fine. This tutorial is based on IntelliJ IDEA, which is probably the best Java IDE available.

Follow the links above to download and install everything you need to meet all the prerequisites. In order, set up Java, Maven, or Gradle, and an IDE for Java. Follow the official installation guides to avoid common problems and issues.

Let’s now verify that you meet all prerequisites.

Verify that Java is configured correctly

Open your terminal. You can verify that you installed Java and set up the Java PATH correctly with the command below:

java -version

This command should print something similar to what follows:

java version "17.0.5" 2022-10-18 LTS

Java(TM) SE Runtime Environment (build 17.0.5+9-LTS-191)

Java HotSpot(TM) 64-Bit Server VM (build 17.0.5+9-LTS-191, mixed mode, sharing)

Verify that Maven or Gradle is installed

If you chose Maven, run the following command in your terminal:

mvn -v

You should get some info about the version of Maven you configured, as follows:

Apache Maven 3.8.6 (84538c9988a25aec085021c365c560670ad80f63)

Maven home: C:\Maven\apache-maven-3.8.6

Java version: 17.0.5, vendor: Oracle Corporation, runtime: C:\Program Files\Java\jdk-17.0.5

Default locale: en_US, platform encoding: Cp1252

OS name: "windows 11", version: "10.0", arch: "amd64", family: "windows"

While if you opted for Gradle, execute in your terminal:

gradle -v

Similarly, this should print some info about the version of Gradle you installed, as below:

------------------------------------------------------------

Gradle 7.5.1

------------------------------------------------------------

Build time: 2022-08-05 21:17:56 UTC

Revision: d1daa0cbf1a0103000b71484e1dbfe096e095918

Kotlin: 1.6.21

Groovy: 3.0.10

Ant: Apache Ant(TM) version 1.10.11 compiled on July 10 2021

JVM: 17.0.5 (Oracle Corporation 17.0.5+9-LTS-191)

OS: Windows 11 10.0 amd64

Great! You are now ready to learn how to perform web scraping with Jsoup in Java!

How To Build a Web Scraper Using Jsoup

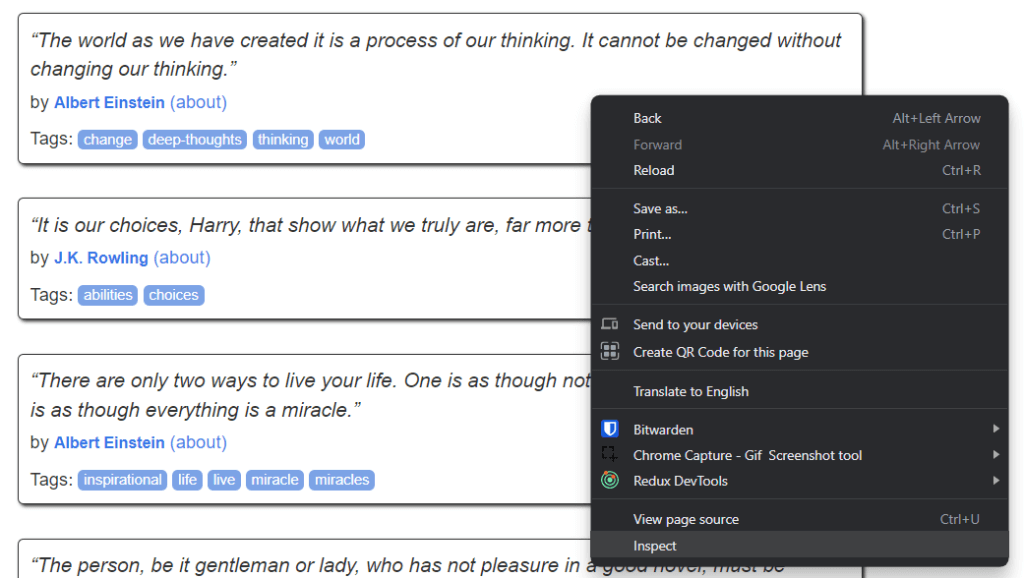

Here, you are going to learn how to build a script for web scraping with Jsoup. This script will be able to automatically extract data from a website. In detail, the target website is Quotes to Scrape. If you are not familiar with this project, it is nothing more than a sandbox for web scraping.

This is what the Quotes to Scrape page looks like:

Quotes to Scrape in a nutshell

As you can see, the target website simply contains a paginated list of quotes. The goal of the Jsoup web scraper is to go through each page, retrieve all quotes, and return this data in CSV format.

Now, follow this step-by-step Jsoup tutorial and learn how to build a simple web scraper!

Step 1: Set up a Java Project

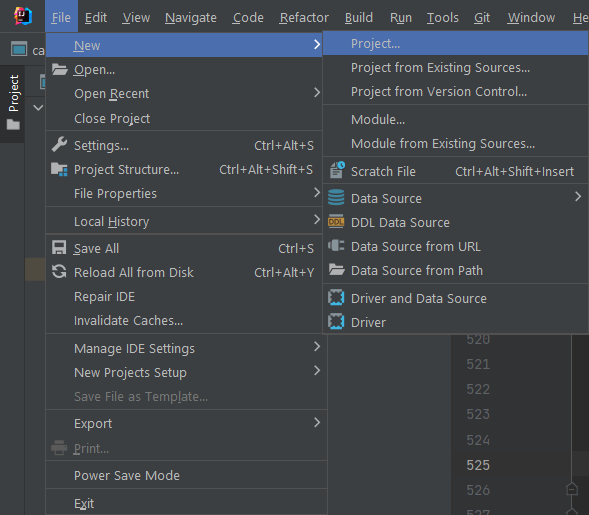

Here, you are about to see how you can initialize a Java project in IntelliJ IDEA 2022.2.3. Note that any other IDE will be fine. In IntelliJ IDEA, it only takes a bunch of clicks to set up a Java project. Launch IntelliJ IDEA and wait for it to load. Then, select the File > New > Project... option in the top menu.

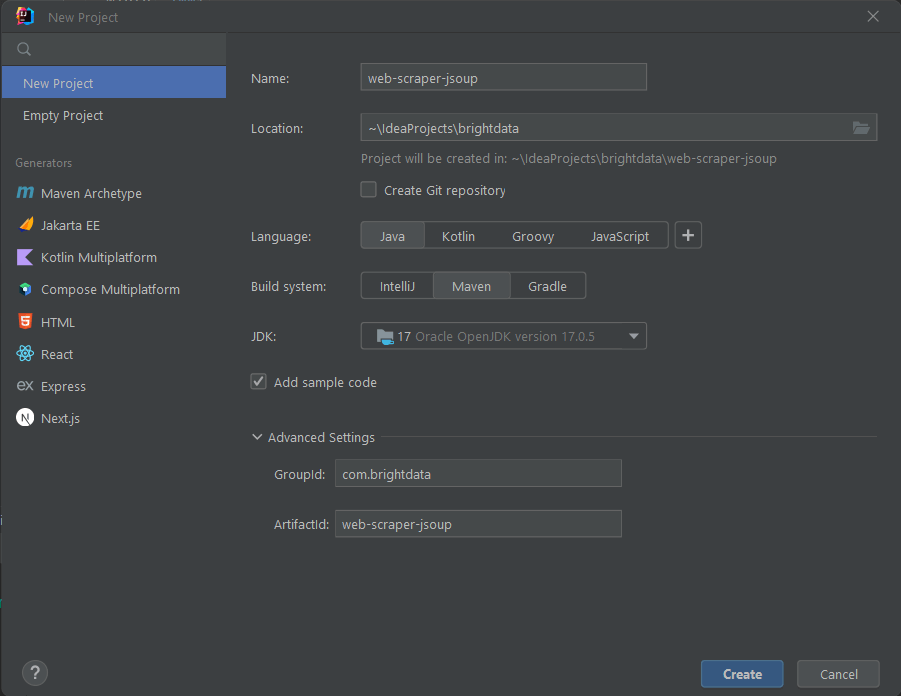

Now, initialize your Java project in the New Project popup as follows:

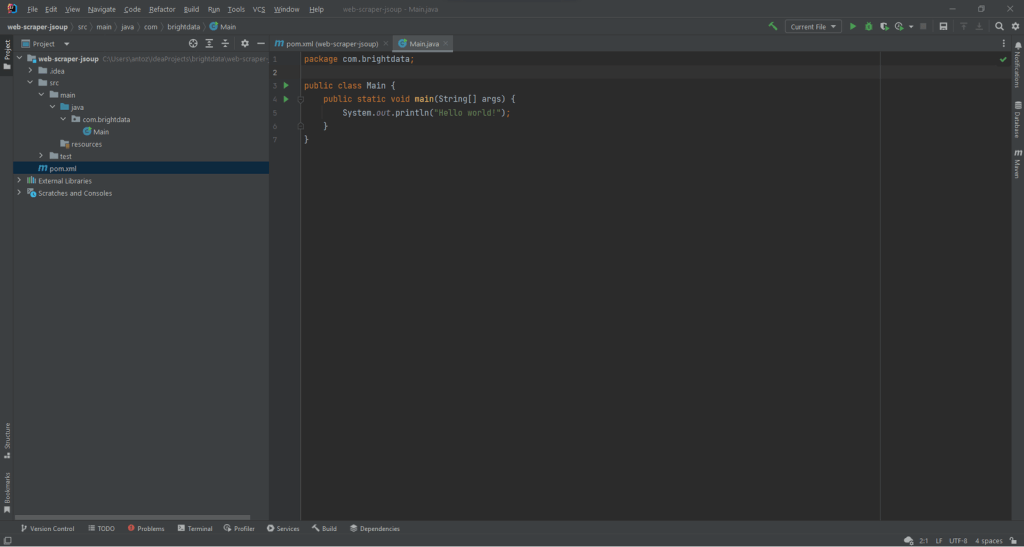

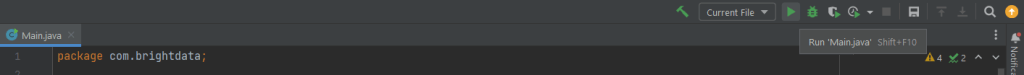

Give your project a name and location, select Java as programming language, and choose between Maven or Gradle depending on the build tool you installed. Click the Create button and wait for IntelliJ IDEA to initialize your Java project. You should now be seeing the following empty Java project:

IltelliJ IDEA creates the Main.java class automatically

It is now time to install Jsoup and start scraping data from the Web!

Step 2: Install Jsoup

If you are a Maven user, add the lines below inside the dependencies tag of your pom.xml file:

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.15.3</version>

</dependency>

Your Maven pom.xml file should now look like as follows:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.brightdata</groupId>

<artifactId>web-scraper-jsoup</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>17</maven.compiler.source>

<maven.compiler.target>17</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.15.3</version>

</dependency>

</dependencies>

</project>

Alternatively, if you are a Gradle user, add this line to the dependencies object of your build.gradle file:

implementation "org.jsoup:jsoup:1.15.3"

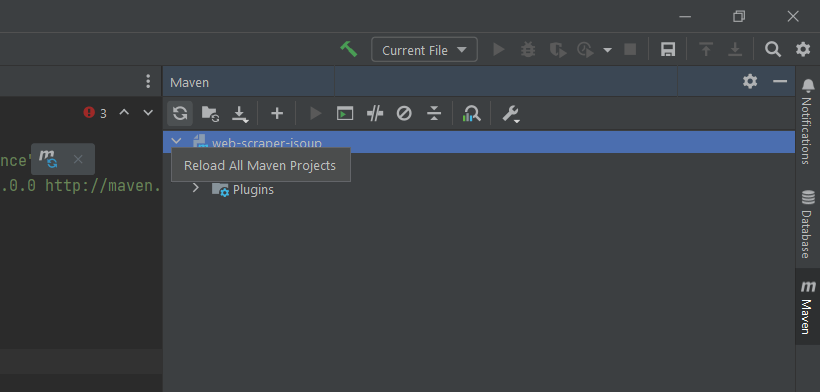

You just added jsoup to your project’s dependencies. It is now time to install it. On IntelliJ IDEA, click on the Gradle/Maven reload button below:

The Maven reload button

This will install the jsoup dependency. Wait for the installation process to end. You now have access to all Jsoup features. You can verify that Jsoup was installed correctly by adding this import line on top of your Main.java file:

import org.jsoup.*;

If IntelliJ IDEA reports no error, it means that you can now use Jsoup in your Java web scraping script.

Let’s now code a web scraper with Jsoup!

Step 3: Connect to your target web page

You can use Jsoup to connect to your target website in a single line of code:

// downloading the target website with an HTTP GET request

Document doc = Jsoup.connect("https://quotes.toscrape.com/").get();

Thanks to the Jsoup connect() method, you can connect to a website. What happens behind the scene is that Jsoup performs an HTTP GET request to the URL specified as a parameter, get the HTML document returned by the target server, and store it in the doc Jsoup Document object.

Keep in mind that if connect() fails, Jsoup will raise an IOException. This may occur for several reasons. However, you should be aware that many websites block requests that do not involve a valid User-Agent header. If you are not familiar with this, the User-Agent header is a string value that identifies the application and OS version from which a request originates. Find out more about User-Agents for web scraping.

You can specify a User-Agent header in Jsoup as below:

Document doc = Jsoup

.connect("https://quotes.toscrape.com/")

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")

.get();

Specifically, the Jsoup userAgent() method allows you to set the User-Agent header. Note that you set any other HTTP header a value through the header() method.

Your Main.java class should now look like as follows:

package com.brightdata;

import org.jsoup.*;

import org.jsoup.nodes.*;

import java.io.IOException;

public class Main {

public static void main(String[] args) throws IOException {

// downloading the target website with an HTTP GET request

Document doc = Jsoup

.connect("https://quotes.toscrape.com/")

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")

.get();

}

}

Let’s start analyzing the target website to learn how to extract data from it.

Step 4: Inspect the HTML page

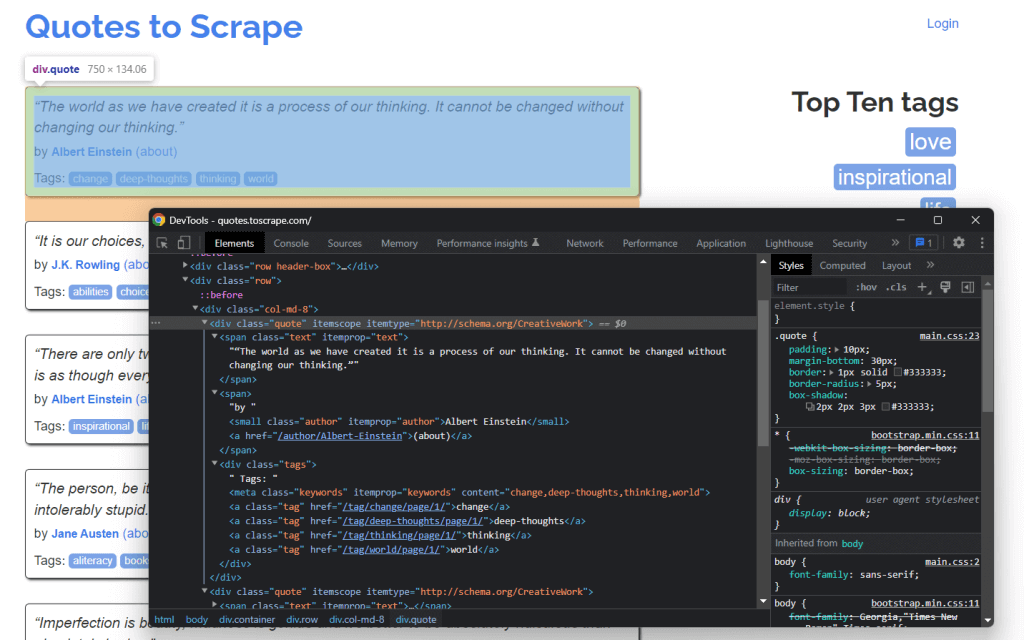

If you want to extract data from an HTML document, you must first analyze the HTML code of the web page. First, you have to identify the HTML elements that contain the data you want to scrape. Then, you have to find a way to select these HTML elements.

You can achieve all this through your browser’s developer tools. In Google Chrome or any Chromium-based browser, right-click an HTML element shwoing some data of interest. Then, select Inspect.

This is what you should now be seeing:

By digging into the HTML code, you can see that each quote is wrapped in a <div> HTML. In detail, this <div> element contains:

A

<span>HTML element containing the text of the quoteA

<small>HTML element containing the name of the authorA

<div>element with a list of<a>HTML elements containing the tags associated with the quote.

Now, take a look at the CSS classes used by these HTML elements. Thanks to them, you can define the CSS selectors you need to extract those HTML elements from the DOM. Specifically, you can retrieve all the data associated with a quote by applying the CSS selectors on .quote below:

.text.author.tags .tag

Let’s now learn how you can do this in Jsoup.

Step 5: Select HTML elements with Jsoup

The Jsoup Document class offers several ways to select HTML elements from the DOM. Let’s dig into the most important ones.

Jsoup allows you to extract HTML elements based on their tags:

// selecting all <div> HTML elements

Elements divs = doc.getElementsByTag("div");

This will return the list of <div> HTML elements contained in the DOM.

Similarly, you can select HTML elements by class:

// getting the ".quote" HTML element

Elements quotes = doc.getElementsByClass("quote");

If you want to retrieve a single HTML element based on its id attribute, you can use:

// getting the "#quote-1" HTML element

Element div = doc.getElementById("quote-1");

You can also select HTML elements by an attribute:

// selecting all HTML elements that have the "value" attribute

Elements htmlElements = doc.getElementsByAttribute("value");

Or that contains a particular piece of text:

// selecting all HTML elements that contain the word "for"

Elements htmlElements = doc.getElementsContainingText("for");

These are just a few examples. Keep in mind that Jsoup comes with more than 20 different approaches to selecting HTML elements from a web page. See them all.

As learned before, CSS selectors are an effective way to select HTML elements. You can apply a CSS selector to retrieve elements in Jsoup through the select() method:

// selecting all quote HTML elements

Elements quoteElements = doc.getElementsByClass(".quote");

Since Elements extends ArrayList, you can iterate over it to get every Jsoup Element. Note that you can apply all HTML selection methods also to a single Element. This will restrict the selection logic to the children of the selected HTML element.

So, you can select the desired HTML elements on each .quote as below:

for (Element quoteElement: quoteElements) {

Element text = quoteElement.select(".text").first();

Element author = quoteElement.select(".author").first();

Elements tags = quoteElement.select(".tag");

}

Let’s now learn how to extract data from these HTML elements.

Step 6: Extract data from a web page with Jsoup

First, you need a Java class where you can store the scraped data. Create a Quote.java file in the main package and initialize it as follows:

package com.brightdata;

package com.brightdata;

public class Quote {

private String text;

private String author;

private String tags;

public String getText() {

return text;

}

public void setText(String text) {

this.text = text;

}

public String getAuthor() {

return author;

}

public void setAuthor(String author) {

this.author = author;

}

public String getTags() {

return tags;

}

public void setTags(String tags) {

this.tags = tags;

}

}

Now, let’s extend the snippet presented at the end of the previous section. Extract the desired data from the selected HTML elements and store it in Quote objects as follows:

// initializing the list of Quote data objects

// that will contain the scraped data

List<Quote> quotes = new ArrayList<>();

// retrieving the list of product HTML elements

// selecting all quote HTML elements

Elements quoteElements = doc.select(".quote");

// iterating over the quoteElements list of HTML quotes

for (Element quoteElement : quoteElements) {

// initializing a quote data object

Quote quote = new Quote();

// extracting the text of the quote and removing the

// special characters

String text = quoteElement.select(".text").first().text()

.replace("“", "")

.replace("”", "");

String author = quoteElement.select(".author").first().text();

// initializing the list of tags

List<String> tags = new ArrayList<>();

// iterating over the list of tags

for (Element tag : quoteElement.select(".tag")) {

// adding the tag string to the list of tags

tags.add(tag.text());

}

// storing the scraped data in the Quote object

quote.setText(text);

quote.setAuthor(author);

quote.setTags(String.join(", ", tags)); // merging the tags into a "A, B, ..., Z" string

// adding the Quote object to the list of the scraped quotes

quotes.add(quote);

}

Since each quote can have more than one tag, you can store them all in a Java List. Then, you can use the String.join() method to reduce the list of strings to a single string. You can finally store this string in the quote object.

At the end of the for loop, quotes will store all quote data extracted from the home page of the target website. But the target website consists of many pages!

Let’s learn how to use Jsoup to crawl an entire website.

Step 7: How to crawl the entire website with Jsoup

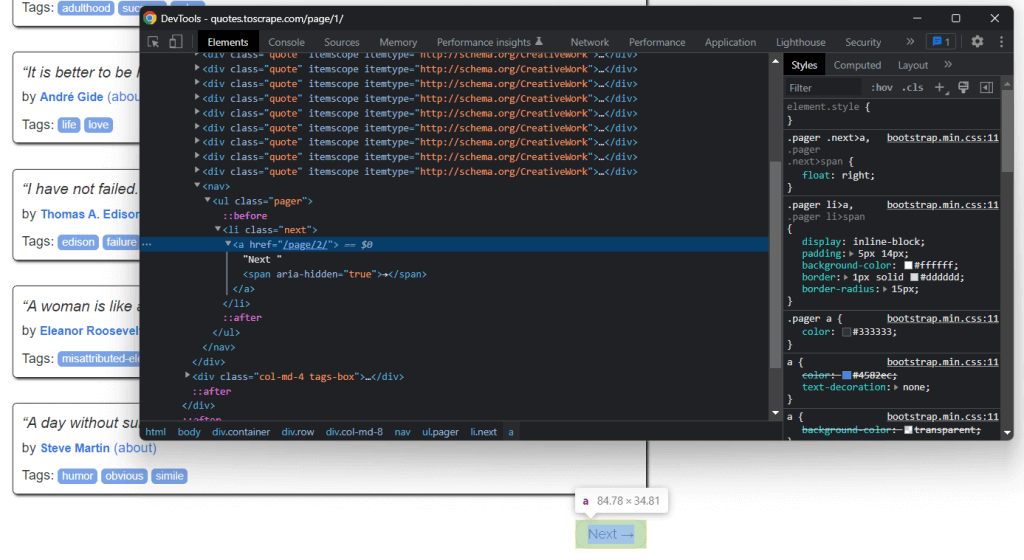

If you look closely at Quotes to Scrape home page, you will note a “Next →” button. Inspect this HTML element with your browser’s developer tools. Right-click on it, and select Inspect.

Here, you can notice that the “Next →” button is <li> HTML element. This contains an <a> HTML element that stores the relative URL to the next page. Note that you can find the “Next →” button on all but the last page of the target website. Most paginated websites follow such an approach.

By extracting the link stored in that <a> HTML element, you can get the next page to scrape. So, if you want to scrape the entire website, follow the logic below:

Search for the

.nextHTML elementif present, extract the relative URL contained in its

<a>child and move to 2.if not present, this is the last page and you can stop here

Concatenate the relative URL extracted by the

<a>HTML element with the base URL of the websiteUse the complete URL to connect to the new page

Scrape the data from the new page

Go to 1.

This is what web crawling is about. You can crawl a paginated website with Jsoup as follows:

// the URL of the target website's home page

String baseUrl = "https://quotes.toscrape.com";

// initializing the list of Quote data objects

// that will contain the scraped data

List<Quote> quotes = new ArrayList<>();

// retrieving the home page...

// looking for the "Next →" HTML element

Elements nextElements = doc.select(".next");

// if there is a next page to scrape

while (!nextElements.isEmpty()) {

// getting the "Next →" HTML element

Element nextElement = nextElements.first();

// extracting the relative URL of the next page

String relativeUrl = nextElement.getElementsByTag("a").first().attr("href");

// building the complete URL of the next page

String completeUrl = baseUrl + relativeUrl;

// connecting to the next page

doc = Jsoup

.connect(completeUrl)

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")

.get();

// scraping logic...

// looking for the "Next →" HTML element in the new page

nextElements = doc.select(".next");

}

As you can see, you can implement the crawling logic explained above with a simple while cycle. It only takes a few lines of code. Specifically, you need to follow a do ... while approach.

Congrats! You are now able to crawl an entire site. All that is left is to learn how to convert the scraped data into a more useful format.

Step 8: Export scraped data to CSV

You can convert the scraped data into a CSV file as follows:

// initializing the output CSV file

File csvFile = new File("output.csv");

// using the try-with-resources to handle the

// release of the unused resources when the writing process ends

try (PrintWriter printWriter = new PrintWriter(csvFile)) {

// iterating over all quotes

for (Quote quote : quotes) {

// converting the quote data into a

// list of strings

List<String> row = new ArrayList<>();

// wrapping each field with between quotes

// to make the CSV file more consistent

row.add("\"" + quote.getText() + "\"");

row.add("\"" +quote.getAuthor() + "\"");

row.add("\"" +quote.getTags() + "\"");

// printing a CSV line

printWriter.println(String.join(",", row));

}

}

This snippet converts the quote into CSV format and stores it in an output.csv file. As you can see, you do not need an additional dependency to achieve this. All you have to do is initialize a CSV file with File. Then, you can populate with a PrintWriter by printing each quote as a CSV-formatted row in the output.csv file.

Note that you should always close a PrintWriter when you no longer need it. In detail, the try-with-resources above will ensure that the PrintWriter instance gets closed at the end of the try statement.

You started from navigating a website and you can now scrape all its data to store it in a CSV file. It is now time to have a look at the complete Jsoup web scraper.

Putting it all together

This is what the complete Jsoup web scraping script in Java looks like:

package com.brightdata;

import org.jsoup.*;

import org.jsoup.nodes.*;

import org.jsoup.select.Elements;

import java.io.File;

import java.io.IOException;

import java.io.PrintWriter;

import java.nio.charset.StandardCharsets;

import java.util.ArrayList;

import java.util.List;

public class Main {

public static void main(String[] args) throws IOException {

// the URL of the target website's home page

String baseUrl = "https://quotes.toscrape.com";

// initializing the list of Quote data objects

// that will contain the scraped data

List<Quote> quotes = new ArrayList<>();

// downloading the target website with an HTTP GET request

Document doc = Jsoup

.connect(baseUrl)

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")

.get();

// looking for the "Next →" HTML element

Elements nextElements = doc.select(".next");

// if there is a next page to scrape

while (!nextElements.isEmpty()) {

// getting the "Next →" HTML element

Element nextElement = nextElements.first();

// extracting the relative URL of the next page

String relativeUrl = nextElement.getElementsByTag("a").first().attr("href");

// building the complete URL of the next page

String completeUrl = baseUrl + relativeUrl;

// connecting to the next page

doc = Jsoup

.connect(completeUrl)

.userAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36")

.get();

// retrieving the list of product HTML elements

// selecting all quote HTML elements

Elements quoteElements = doc.select(".quote");

// iterating over the quoteElements list of HTML quotes

for (Element quoteElement : quoteElements) {

// initializing a quote data object

Quote quote = new Quote();

// extracting the text of the quote and removing the

// special characters

String text = quoteElement.select(".text").first().text();

String author = quoteElement.select(".author").first().text();

// initializing the list of tags

List<String> tags = new ArrayList<>();

// iterating over the list of tags

for (Element tag : quoteElement.select(".tag")) {

// adding the tag string to the list of tags

tags.add(tag.text());

}

// storing the scraped data in the Quote object

quote.setText(text);

quote.setAuthor(author);

quote.setTags(String.join(", ", tags)); // merging the tags into a "A; B; ...; Z" string

// adding the Quote object to the list of the scraped quotes

quotes.add(quote);

}

// looking for the "Next →" HTML element in the new page

nextElements = doc.select(".next");

}

// initializing the output CSV file

File csvFile = new File("output.csv");

// using the try-with-resources to handle the

// release of the unused resources when the writing process ends

try (PrintWriter printWriter = new PrintWriter(csvFile, StandardCharsets.UTF_8)) {

// to handle BOM

printWriter.write('\ufeff');

// iterating over all quotes

for (Quote quote : quotes) {

// converting the quote data into a

// list of strings

List<String> row = new ArrayList<>();

// wrapping each field with between quotes

// to make the CSV file more consistent

row.add("\"" + quote.getText() + "\"");

row.add("\"" +quote.getAuthor() + "\"");

row.add("\"" +quote.getTags() + "\"");

// printing a CSV line

printWriter.println(String.join(",", row));

}

}

}

}

As shown here, you can implement a web scraper in Java in less than 100 lines of code. Thanks to Jsoup, you can connect to a website, crawl it entirely, and automatically extract all its data. Then, you can write the scraped data in a CSV file. This is what this Jsoup web scraper is about.

In IntelliJ IDEA, launch the web scraping Jsoup script by clicking the button below:

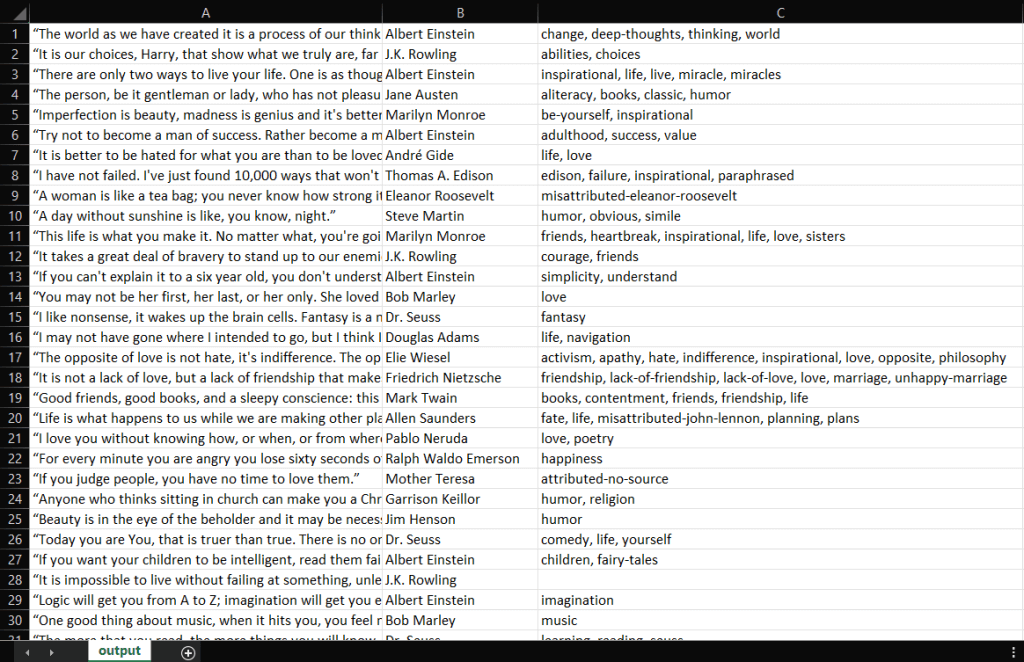

IntelliJ IDEA will compile the Main.java file and execute the Main class. At the end of the scraping process, you will find an output.csv file in the root directory of the project. Open it, and it should contain this data:

Well done! You now have a CSV file containing all 100 quotes of Quotes to Scrape! This means that you just learn how to build a web scraper with Jsoup!

Conclusion

In this tutorial, you learned what you need to get started building a web scraper, what Jsoup is, and how you can use it to scrape data from the Web. In detail, you saw how to use Jsoup to build a web scraping application through a real-world example. As you learned, web scraping with Jsoup in Java involves only a bunch of lines of code.

Yet, web scraping is not that easy. This is because there are several challenges you have to face. Do not forget that anti-bot and anti-scraping technologies are now more popular than ever. All you need is a powerful and fully-featured web scraping tool, provided by Bright Data. Don’t want to deal with scraping at all? Take a look at our datasets.

If you want to learn more about how to avoid getting blocked, you can adopt a proxy based on your use case from one of the many proxy services available in Bright Data.